Open Source AI Agents Are Exciting. But Unchecked Openness Comes With Real Security Costs

The Root team

Published: Feb 2, 2026

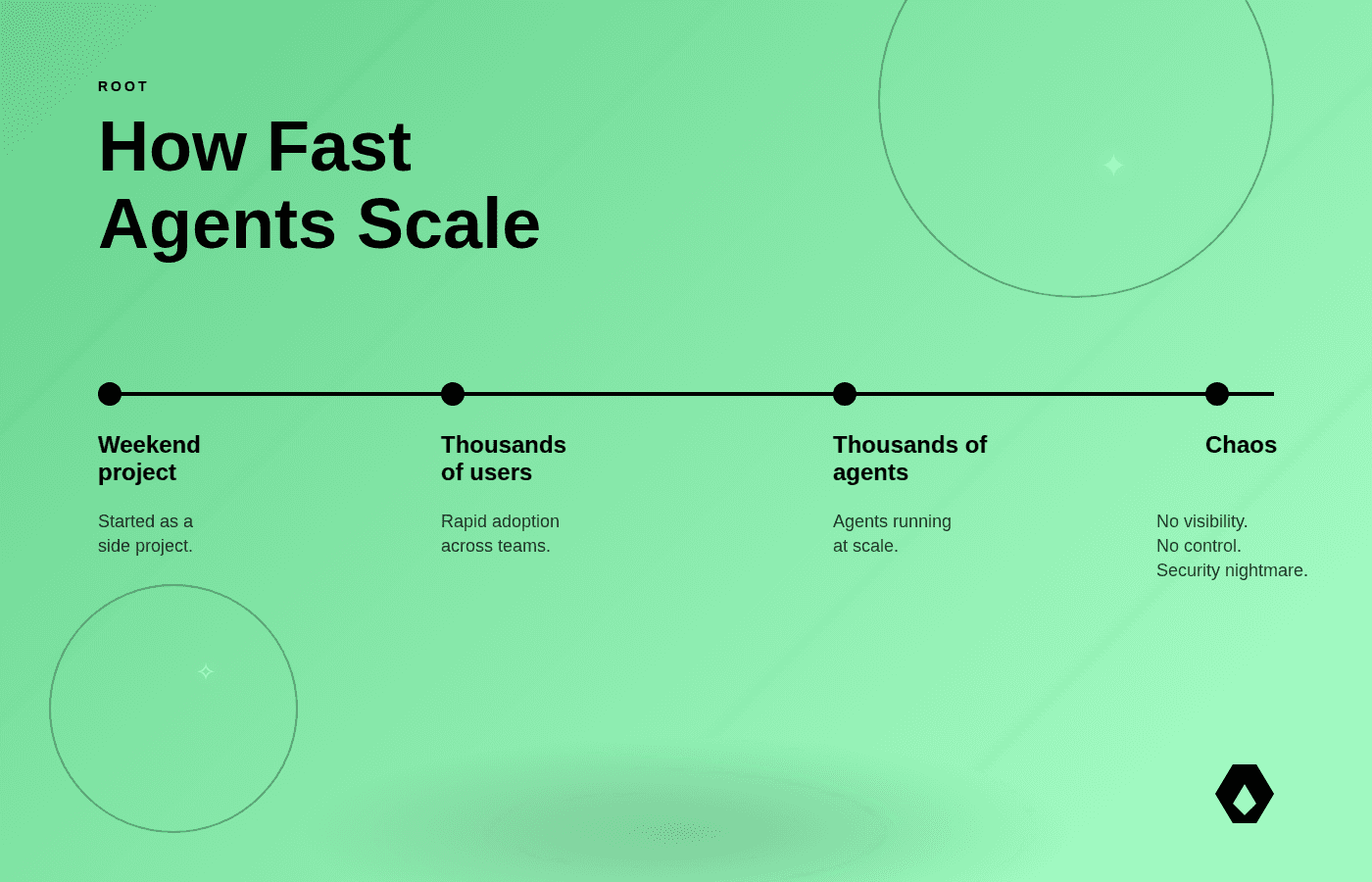

Moltbot went from weekend project to global phenomenon in months. Tens of thousands of users. Thousands of autonomous agents. And now Moltbook: a social network where AI agents interact with each other, no humans required.

It's part of a broader pattern. Stanford's AI Index, OWASP's ML Security framework, and NIST's AI Risk Management guidance all point to the same challenge: as AI agents become more autonomous and interconnected, traditional security models break down. Moltbot isn't an outlier; it's an early warning.

The same dynamics that made Moltbot successful (open source, easy adoption, autonomous behavior) are exactly what make it risky at scale. At Root, we run thousands of specialized AI agents in production to remediate open-source vulnerabilities. We've learned what works when deploying autonomous AI safely.

Here's what we're seeing.

Three Hazards That Matter

| Hazard | What happens | What to do |
|---|---|---|
| Supply chain risk & fake versions | Open source draws attackers. Fake Moltbot versions spread as malware-bearing VS Code extensions. Users assume "open source = safe." | Require repo signing and verification. Track provenance. Maintain approved lists. |
| Deep system access without controls | Moltbot runs locally with access to file systems, credentials, env vars: everything. On a personal machine, that's passwords, email, 2FA, banking. Anyone who compromises the agent gets it all. | Never run agents with direct access to your personal machine. Use VMs, containers, or sandboxed systems only. Least-privilege access. Log everything. |
| Agents talking to agents, no humans | Moltbook = agent-to-agent social network. Emergent behavior: coordination without validation, behaviors drifting. Unpredictable risk. | Build guardrails from the start. Keep humans in the loop for critical decisions. Monitor for anomalies. Have kill switches ready. |

1. Supply Chain Risk and Fake Versions

Open source draws contributors. It also draws attackers. Fake Moltbot versions have already spread as malware-bearing VS Code extensions. People downloaded them thinking "open source equals safe."

If your teams are running open-source AI agents, you need to know where they came from, who signed them, and what they have access to. Require repository signing and verification. Track provenance. Maintain approved lists.
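As a rough illustration of the "approved list" idea, here is a minimal Python sketch that rejects any artifact whose SHA-256 digest is not pinned in advance. The file name, the `APPROVED_DIGESTS` table, and the helper names are hypothetical. Digest pinning is also a simpler stand-in for real signature verification, which would check a publisher's cryptographic signature rather than a locally stored hash.

```python
import hashlib
import hmac
from pathlib import Path

# Hypothetical approved list: artifact file name -> expected SHA-256 hex digest.
# In practice this table would come from a signed, version-controlled source.
APPROVED_DIGESTS = {
    "example-agent-1.0.0.tar.gz": hashlib.sha256(b"trusted release bytes").hexdigest(),
}


def sha256_of_file(path: Path, chunk_size: int = 65536) -> str:
    """Stream the file so large artifacts do not need to fit in memory."""
    digest = hashlib.sha256()
    with path.open("rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def is_approved(path: Path, approved: dict[str, str] = APPROVED_DIGESTS) -> bool:
    """Return True only if the file name is listed and its digest matches."""
    expected = approved.get(path.name)
    if expected is None:
        return False  # unknown artifacts are rejected by default
    # Constant-time comparison of the computed and pinned digests.
    return hmac.compare_digest(sha256_of_file(path), expected)
```

The default-deny branch is the important design choice: an artifact nobody has reviewed is treated the same as one that has been tampered with.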

At Root, we deliver secured open source with full provenance: every patch, library, and image is signed and verifiable. When you're fixing vulnerabilities at scale, supply chain integrity has to be built in, not bolted on.

2. Deep System Access Without Controls — Your Personal Computer Is Not a Safe Playground

Moltbot isn't a web app. It runs locally with access to file systems, credentials, environment variables: everything. When users install it on their personal computers, they're handing an autonomous system access to their entire digital life.

Your personal machine holds your passwords. Your social media sessions. Your email. Your two-factor authentication codes. Your banking credentials. Even if Moltbot itself is benign, it has access to everything, and so does anyone who compromises it.

Running AI agents on personal devices without strict isolation means giving away the keys to your identity.

Critical: Never run open-source AI agents with direct access to your personal machine. Use isolated environments: VMs, containers, or sandboxed systems only. Assume any agent can access anything you can access. If it runs under your user account, it inherits all your permissions, including your passwords, email, and social media accounts.

Run AI agents with least-privilege access only. Sandbox environments. Monitor behavior in real time. Log everything; you'll need it for audits and forensics.
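One small piece of least-privilege can be sketched in Python: launching an agent process with an allowlisted environment, a throwaway working directory, a timeout, and a log line for every run. This is an assumption-laden sketch, not a sandbox. It assumes a POSIX-like host, the `ALLOWED_ENV` list and function names are hypothetical, and it does nothing to restrict file system or network access, which still requires a VM, container, or similar isolation.

```python
import logging
import os
import subprocess
import tempfile

logging.basicConfig(level=logging.INFO)
log = logging.getLogger("agent-runner")

# Hypothetical allowlist: the only environment variables the child may see.
ALLOWED_ENV = ("PATH", "LANG")


def scrubbed_env(source: dict[str, str], allowed=ALLOWED_ENV) -> dict[str, str]:
    """Copy only allowlisted variables, dropping tokens, keys, and other secrets."""
    return {name: source[name] for name in allowed if name in source}


def run_restricted(command: list[str], timeout_s: float = 30.0) -> subprocess.CompletedProcess:
    """Run a command with a minimal environment in a temporary directory."""
    env = scrubbed_env(dict(os.environ))
    with tempfile.TemporaryDirectory() as workdir:
        log.info("running %s in %s with env keys %s", command, workdir, sorted(env))
        result = subprocess.run(
            command,
            env=env,
            cwd=workdir,
            capture_output=True,
            text=True,
            timeout=timeout_s,
        )
    log.info("exit code %d", result.returncode)
    return result
```

Starting from an empty environment and adding back only what is needed means a new credential exported in your shell tomorrow is not silently inherited by the agent.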

Root's platform operates within strict boundaries. Our agents work in controlled environments with explicit authorization for specific tasks. We log everything, monitor continuously, and validate before we ship. That's how you run AI agents at scale without losing control.

3. Agents Talking to Agents Without Humans in the Loop

Moltbook is a social network for AI agents: no humans, just agents interacting with other agents. It's fascinating. It's also completely unpredictable.

When agents interact autonomously, you get emergent behavior: agents coordinating actions, information spreading without validation, behaviors drifting from original parameters. In an enterprise context, that's risk you can't easily quantify or manage.

Build guardrails into agent behavior from the start. Keep humans in the loop for critical decisions. Monitor interactions for anomalies. Have kill switches ready.
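To make the guardrail, human-in-the-loop, and kill-switch ideas concrete, here is a minimal Python sketch of a gate that every agent action passes through. The `Guardrail` class, the `CRITICAL_ACTIONS` set, and the approval callback are hypothetical names chosen for illustration. A real deployment would back the approval step with a review queue and the kill switch with something operators can trigger out of band.

```python
import threading
from dataclasses import dataclass, field
from typing import Callable

# Hypothetical set of action names that always need a human decision.
CRITICAL_ACTIONS = {"deploy", "delete", "send_external_message"}


class AgentHalted(RuntimeError):
    """Raised when the kill switch has been triggered."""


@dataclass
class Guardrail:
    # Human approval callback: receives (agent_id, action), returns True to allow.
    approve: Callable[[str, str], bool]
    kill_switch: threading.Event = field(default_factory=threading.Event)
    audit_log: list[tuple[str, str, str]] = field(default_factory=list)

    def execute(self, agent_id: str, action: str, run: Callable[[], object]) -> object:
        """Run the action only if the switch is clear and any needed approval is given."""
        if self.kill_switch.is_set():
            self.audit_log.append((agent_id, action, "halted"))
            raise AgentHalted(f"kill switch set; refusing {action!r}")
        if action in CRITICAL_ACTIONS and not self.approve(agent_id, action):
            self.audit_log.append((agent_id, action, "denied"))
            return None
        self.audit_log.append((agent_id, action, "executed"))
        return run()
```

Setting `guardrail.kill_switch.set()` from any thread stops all further actions through that gate, and the audit log records what was executed, denied, or halted.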

Root's human-in-the-loop approach matters here. Our agents coordinate autonomously during research and patching, but humans validate the output. We get the efficiency of agent swarms without the unpredictability of unchecked autonomy. More than 4 in 5 patches need no changes, but we don't ship without human sign-off.

What This Means for Enterprise AI

Open source isn't inherently unsafe, but powerful open-source AI agents are different. They combine autonomous behavior, system-level access, and agent-to-agent interaction in ways that create a fundamentally different risk profile.

The reality: Open-source AI agents deployed without security controls are the weakest link in your AI stack.

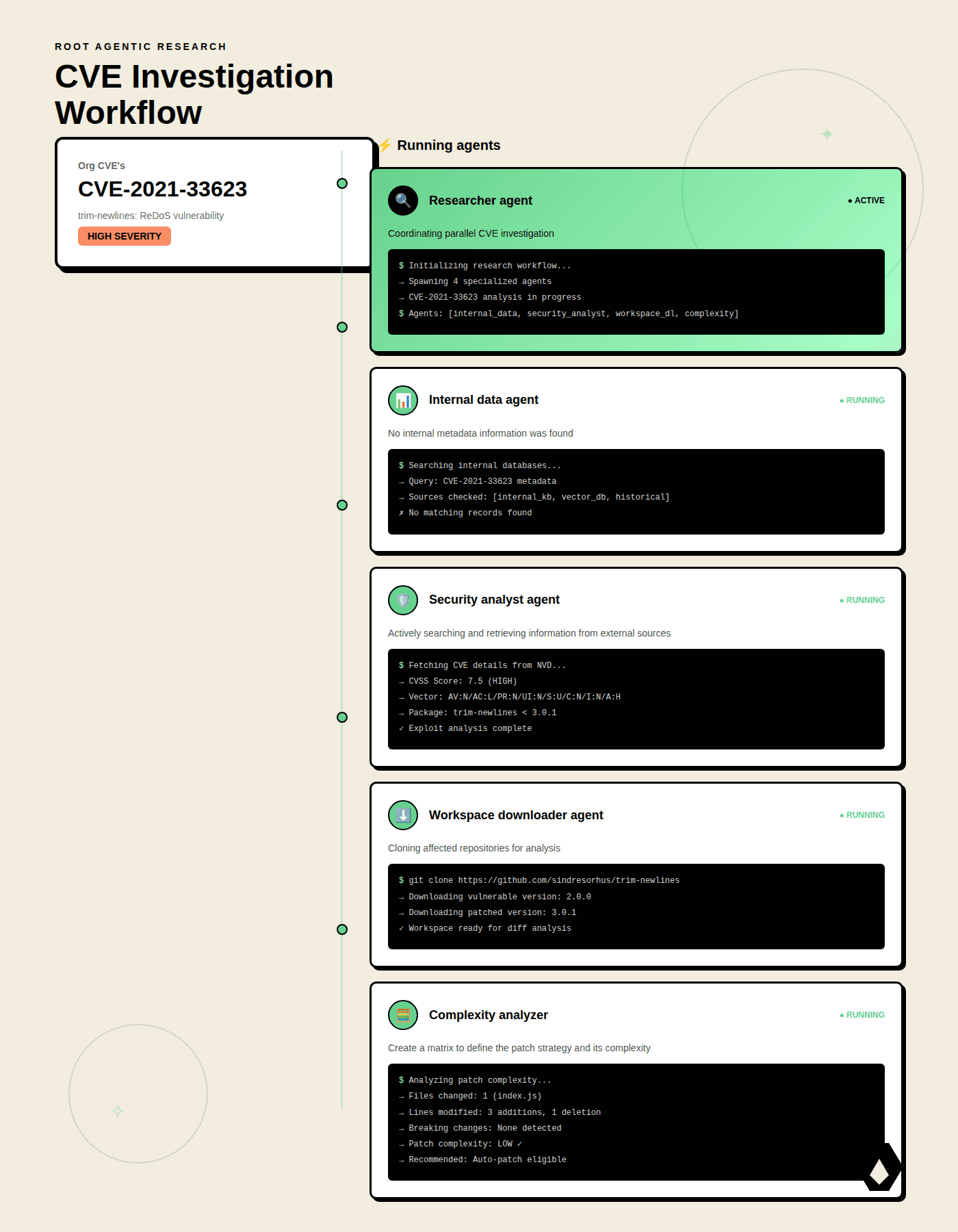

What Root does about it: Root's platform runs thousands of specialized AI agents to remediate vulnerabilities in open-source software. Here's a sample of our agentic workflow.

Here's what we've learned:

Humans in the loop matter. AI gives us scale; humans give us safety. We validate every patch before it ships.

Boundaries are non-negotiable. Our agents operate within clear security boundaries, automating only in explicitly authorized contexts.

Provenance and verification are table stakes. Every patch we deliver is signed, verifiable, and traceable.

What to Do Next

If you're running AI agents in production—or planning to—start here:

Audit what you have. Where are agents running? What do they have access to?

Set governance policies. Define what's acceptable and what requires human validation.

Verify your supply chain. Know where your AI components come from.

Monitor agent behavior. You need real-time visibility.

Learn from production deployments. Talk to teams running AI agents at scale—including Root. We're happy to share what we've learned.
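The monitoring step in the list above can start very simply. The Python sketch below flags any agent that performs more than a set number of actions inside a sliding time window. The `RateMonitor` class and its thresholds are hypothetical. Rate is only one possible anomaly signal, and the timestamps are passed in explicitly so the logic can be replayed against existing logs.

```python
from collections import defaultdict, deque


class RateMonitor:
    """Flag an agent that performs more than max_actions within window_s seconds."""

    def __init__(self, max_actions: int, window_s: float) -> None:
        self.max_actions = max_actions
        self.window_s = window_s
        # One queue of recent action timestamps per agent.
        self._events: dict[str, deque[float]] = defaultdict(deque)

    def record(self, agent_id: str, timestamp: float) -> bool:
        """Record one action; return True if the agent is now over the limit."""
        events = self._events[agent_id]
        events.append(timestamp)
        # Drop timestamps that have fallen out of the sliding window.
        while events and events[0] <= timestamp - self.window_s:
            events.popleft()
        return len(events) > self.max_actions
```

A caller might pause the agent, or require human review of its next actions, when `record` returns `True`.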

The future of AI agents is promising, but we need to build security, transparency, and human oversight into the foundation, not bolt it on later.

Want to discuss your AI agent security strategy? Root has been running agentic AI in production since day one. We've secured thousands of agent interactions and delivered hundreds of thousands of validated fixes. Let's talk at https://www.root.io/book-a-demo.
